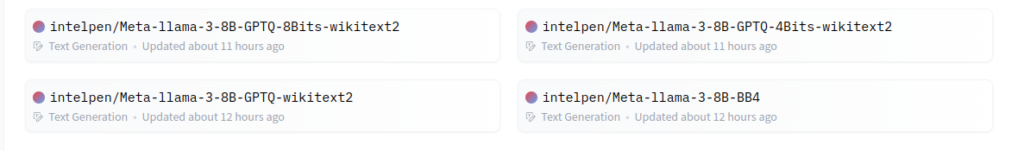

Download our quantized models from Hugging Face at https://huggingface.co/intelpen

We provide GPTQ models in 4-bit and 8-bit versions, which were finetuned on wikitext.

We also provide bitsandbytes 4-bit versions (no finetuning).

Load them with the Hugging Face `AutoModelForCausalLM.from_pretrained` method. In the current version they are fast and run in less than 7 GB of memory, so you can run them on almost any modern NVIDIA GPU.
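A minimal loading sketch follows. It assumes `transformers` and `accelerate` are installed, plus the backend that matches the checkpoint (a GPTQ backend for the GPTQ models, `bitsandbytes` for the 4-bit ones). `MODEL_ID` is a placeholder, not a real repository name, and the helper functions are hypothetical names used for illustration only. Pre-quantized checkpoints normally carry their quantization settings in their own config, so no extra quantization arguments are passed here.

```python
# Placeholder repository id (an assumption): replace it with an actual
# model name listed at https://huggingface.co/intelpen
MODEL_ID = "intelpen/<model-name>"


def build_load_kwargs(use_gpu: bool = True) -> dict:
    """Return keyword arguments for AutoModelForCausalLM.from_pretrained."""
    kwargs = {}
    if use_gpu:
        # Let accelerate place the weights on the available GPU(s).
        kwargs["device_map"] = "auto"
    return kwargs


def load_model(model_id: str, use_gpu: bool = True):
    """Load the tokenizer and the quantized model from the Hugging Face Hub."""
    # Imported lazily so the helper above works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(
        model_id, **build_load_kwargs(use_gpu)
    )
    return tokenizer, model


def generate(tokenizer, model, prompt: str, max_new_tokens: int = 64) -> str:
    """Generate a completion for a single prompt."""
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

With a real repository id in place of the placeholder, `tokenizer, model = load_model(MODEL_ID)` followed by `generate(tokenizer, model, "Hello")` would produce a completion. Actual memory use depends on the chosen checkpoint and the generation length.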

Performance is lower than full Llama 3, but still better than most other 8B-parameter LLMs.

*These are now public models under the Meta Llama 3 license.